I've always been a huge fan of Spotify's Wrapped videos, and as the end of the year approached, I thought we should do something similar for Oniri, a popular dream journal app for iOS that I've been working on for the past few months.

In this post, I'd like to walk through the whole process, including the cost of rendering so many videos (we even put the infrastructure of Render, our hosting provider, under strain!).

Hopefully this can serve as a guide for anyone curious about doing something similar. I'd also welcome critical feedback on how we could have done it better.

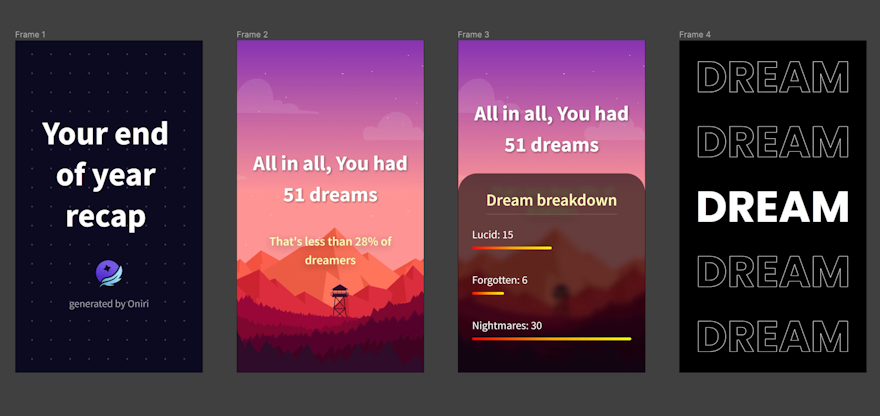

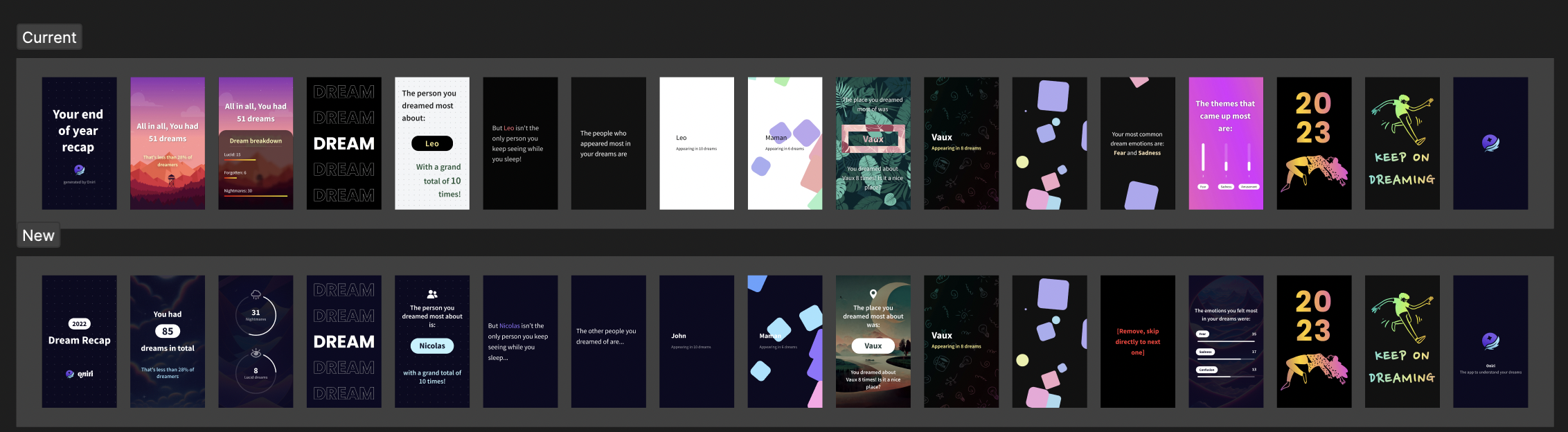

Designing the video

For us, the first step of the process was to design the video: what screens to show, what data to display, how to display it and so on.

We used Figma for this, and worked together to come up with a set of screens we were all happy with.

Then we used Remotion (a framework for creating videos programmatically with React) to animate those screens, feeding them each user's data as JSON input.

Once the JavaScript rendering script was complete, we knew exactly what data we'd need to extract for each user: a list that looked something like this:

```json
[
  {
    "dreams": {"total": 72, "difference": 62, "lucid": 20, "nightmares": 5},
    "persons": [["John", 10], ["Mom", 9], ["Dad", 9]],
    "places": [["Swimming Pool", 3], ["Elevator", 3], ["School", 2]],
    "emotions": [["Friendship", 10], ["Agitation", 9], ["Relief", 8]]
  }
]
```
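To make the expected shape concrete, here is a minimal Python sketch of how one user's entry could be assembled, assuming the per-user counts are already available as collections.Counter objects. The helpers ranked and build_rewind_payload are hypothetical, and the difference value is simply passed through, since how it is computed isn't covered here.

```python
import json
from collections import Counter

TOP_N = 3  # the sample data above has three entries per ranking


def ranked(counts: Counter, top_n: int = TOP_N) -> list:
    # most_common() yields (name, count) tuples, most frequent first;
    # equal counts keep insertion order. Convert to lists to mirror the JSON.
    return [list(pair) for pair in counts.most_common(top_n)]


def build_rewind_payload(total, difference, lucid, nightmares,
                         persons: Counter, places: Counter, emotions: Counter) -> dict:
    """Assemble one user's Remotion input (hypothetical helper)."""
    return {
        "dreams": {"total": total, "difference": difference,
                   "lucid": lucid, "nightmares": nightmares},
        "persons": ranked(persons),
        "places": ranked(places),
        "emotions": ranked(emotions),
    }


payload = build_rewind_payload(
    72, 62, 20, 5,
    persons=Counter({"John": 10, "Mom": 9, "Dad": 9, "Anna": 1}),  # "Anna" falls outside the top 3
    places=Counter({"Swimming Pool": 3, "Elevator": 3, "School": 2}),
    emotions=Counter({"Friendship": 10, "Agitation": 9, "Relief": 8}),
)
print(json.dumps([payload]))
```

Serializing the list with json.dumps produces the same structure as the example above, ready to be stored in a database column or passed to the Remotion script.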

Generating the data

Then came the complicated part: we had to go through the different tables in the database, count how many dreams each user recorded this year and which places, people and emotions were most present, and compare that data to the average.

All in all, we had to go through about 5 tables for each user.

We knew we didn't want to fire off individual queries for each element and work through our user base one user at a time.

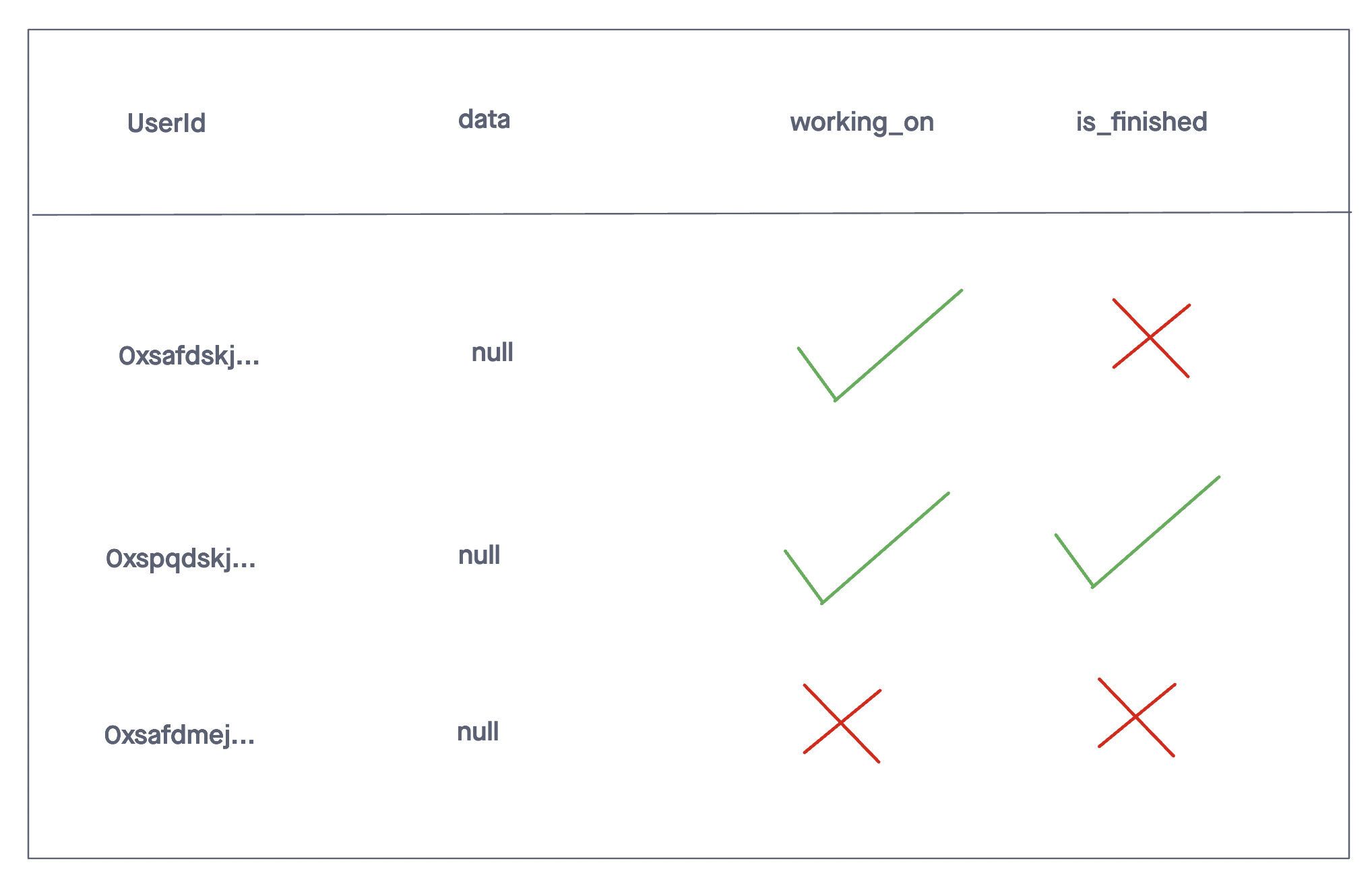

Instead, we created a new table containing one row per user, with two booleans, working_on and is_finished, and a data column to hold the JSON array.
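As a minimal sketch, assuming SQLite (via Python's built-in sqlite3 module) as a stand-in for our actual database, here is how such a job table and the claiming step could look. The table name rewind_jobs and the helpers create_jobs_table and claim_batch are hypothetical. The key point is that selecting free rows and flagging them as working_on happens in a single transaction, so two workers never grab the same users. (On PostgreSQL, SELECT ... FOR UPDATE SKIP LOCKED is the usual way to get the same guarantee without workers blocking each other.)

```python
import sqlite3


def create_jobs_table(conn: sqlite3.Connection) -> None:
    # One row per user, the two status flags, and the JSON payload column
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS rewind_jobs (
            user_id     INTEGER PRIMARY KEY,
            working_on  INTEGER NOT NULL DEFAULT 0,
            is_finished INTEGER NOT NULL DEFAULT 0,
            data        TEXT
        )
        """
    )


def claim_batch(conn: sqlite3.Connection, batch_size: int) -> list:
    """Atomically flag up to batch_size free rows as working_on; return their user IDs."""
    # BEGIN IMMEDIATE takes the write lock up front, so no other worker
    # can select the same rows between our SELECT and UPDATE
    conn.execute("BEGIN IMMEDIATE")
    try:
        rows = conn.execute(
            "SELECT user_id FROM rewind_jobs "
            "WHERE working_on = 0 AND is_finished = 0 "
            "ORDER BY user_id LIMIT ?",
            (batch_size,),
        ).fetchall()
        ids = [row[0] for row in rows]
        conn.executemany(
            "UPDATE rewind_jobs SET working_on = 1 WHERE user_id = ?",
            [(i,) for i in ids],
        )
        conn.execute("COMMIT")
    except Exception:
        conn.execute("ROLLBACK")
        raise
    return ids


# isolation_level=None lets us issue BEGIN/COMMIT ourselves
conn = sqlite3.connect(":memory:", isolation_level=None)
create_jobs_table(conn)
conn.executemany("INSERT INTO rewind_jobs (user_id) VALUES (?)", [(i,) for i in range(1, 11)])
first = claim_batch(conn, 4)
second = claim_batch(conn, 4)
print(first, second)
```

Each call hands out the next unclaimed users, which is what lets many workers share one table safely.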

We then launched about 30 workers, each of which would fetch a few hundred rows, run one huge query to gather all the users' data locally, and then iterate through the fetched rows, looking up each user's data in the resulting dictionaries. It looked something like this:

```python
# One big query per table to get all the users' data and keep it in memory
emotions_dict = get_all_emotions_from_users()
places_dict = get_all_places_from_users()
...

# Then, for each fetched row, look up that user's data in the in-memory dicts
places, emotions, ... = get_individual_user_data(user_id, emotions_dict, places_dict)
```

This meant we didn't overload our main database, and the workers could run in parallel. The whole process took about 30 minutes for all 30'000 users, so it was pretty fast.
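Here is a runnable sketch of the same "one big query, then local lookups" pattern, assuming a hypothetical dream_places table with one row per place mentioned in a dream, and again using sqlite3 in place of our real database. The helper fetch_places_for_batch is hypothetical, and it restricts the query to the batch's user IDs; loading every user at once would work the same way, just with more memory.

```python
import sqlite3
from collections import Counter, defaultdict


def fetch_places_for_batch(conn: sqlite3.Connection, user_ids: list) -> dict:
    """One grouped query for the whole batch instead of one query per user."""
    placeholders = ",".join("?" * len(user_ids))
    rows = conn.execute(
        "SELECT user_id, place, COUNT(*) FROM dream_places "
        f"WHERE user_id IN ({placeholders}) GROUP BY user_id, place",
        user_ids,
    ).fetchall()
    # Group the flat result rows into {user_id: Counter({place: count})}
    places_by_user = defaultdict(Counter)
    for user_id, place, count in rows:
        places_by_user[user_id][place] = count
    return places_by_user


# Tiny in-memory example with the hypothetical dream_places(user_id, place) table
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE dream_places (user_id INTEGER, place TEXT)")
conn.executemany(
    "INSERT INTO dream_places VALUES (?, ?)",
    [(1, "School"), (1, "School"), (1, "Elevator"), (2, "Swimming Pool")],
)

batch = [1, 2, 3]
places_by_user = fetch_places_for_batch(conn, batch)
# Per-user lookups now only touch memory; .get() avoids inserting empty entries
top_places = {uid: places_by_user.get(uid, Counter()).most_common(3) for uid in batch}
print(top_places)
```

One practical limit to keep in mind: SQLite caps the number of ? parameters per statement (999 on older builds), which is comfortably above a batch of a few hundred users.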

In the end, we realised that many of our users had only written down one or two dreams, which wasn't enough data for a meaningful video, so we decided to leave those users out.

Rendering the videos

Next, it was time to run our Remotion script at scale to generate a video for each of those users. We reset working_on and is_finished to false for every row and got started.

Just as when we generated each user's JSON data, we progressively spun up more Node servers, each of which would:

- grab an unfinished row from the database and mark it as being worked on.

- render the video (this would take about 10 minutes per video).

- upload the video to an S3 bucket.

- mark the video as being finished in the database.
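Our workers were Node servers, but to keep a single language in this post, here is the same four-step loop as a Python sketch. The callables claim_next, render_video, upload_to_s3 and mark_finished are hypothetical placeholders: in a real Node worker, the render step would call Remotion (for example renderMedia() from @remotion/renderer) and the upload would go through an S3 client. Passing them in keeps the loop itself easy to test.

```python
from typing import Callable, Optional, Tuple

Job = Tuple[int, dict]  # (user_id, JSON payload for Remotion)


def run_render_worker(
    claim_next: Callable[[], Optional[Job]],
    render_video: Callable[[dict], str],
    upload_to_s3: Callable[[int, str], None],
    mark_finished: Callable[[int], None],
) -> int:
    """Process jobs until none are left; return how many videos this worker rendered."""
    rendered = 0
    while (job := claim_next()) is not None:  # 1. claim an unfinished row
        user_id, payload = job
        video_path = render_video(payload)    # 2. render (about 10 minutes each for us)
        upload_to_s3(user_id, video_path)     # 3. upload the file to S3
        mark_finished(user_id)                # 4. flag the row as finished
        rendered += 1
    return rendered


# Usage with in-memory fakes standing in for the database, Remotion and S3
queue = [(1, {"dreams": {"total": 72}}), (2, {"dreams": {"total": 12}})]
uploads, finished = [], []
count = run_render_worker(
    claim_next=lambda: queue.pop(0) if queue else None,
    render_video=lambda payload: f"/tmp/rewind-{payload['dreams']['total']}.mp4",
    upload_to_s3=lambda user_id, path: uploads.append((user_id, path)),
    mark_finished=finished.append,
)
print(count, finished)
```

One weakness of a plain working_on flag: if a worker dies mid-render, its row stays flagged forever. Storing a claimed-at timestamp and re-claiming rows that have been in progress for too long would cover that case.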

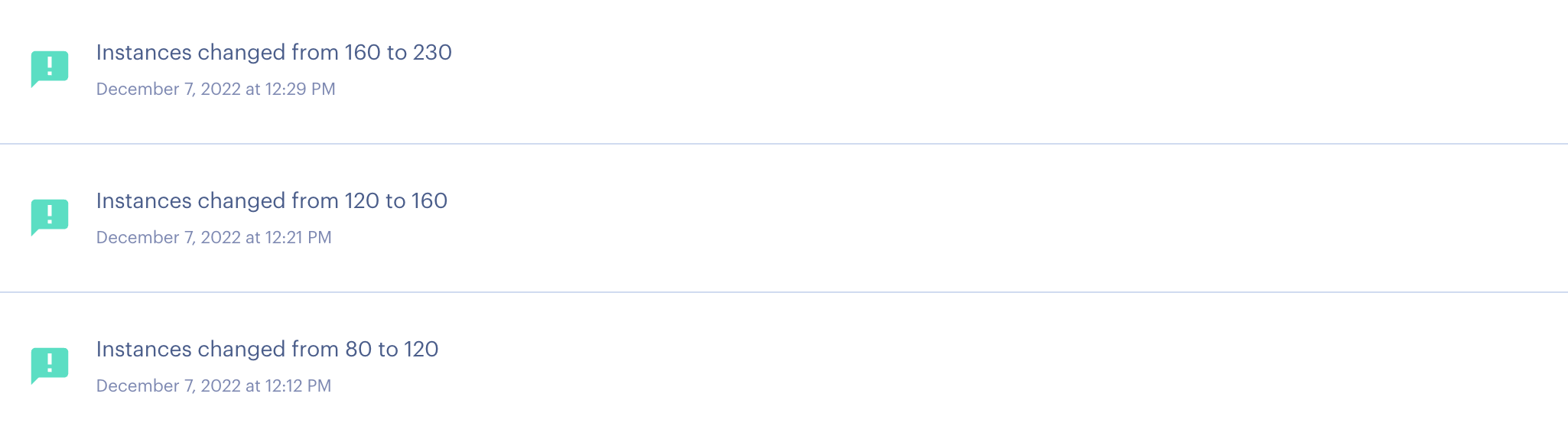

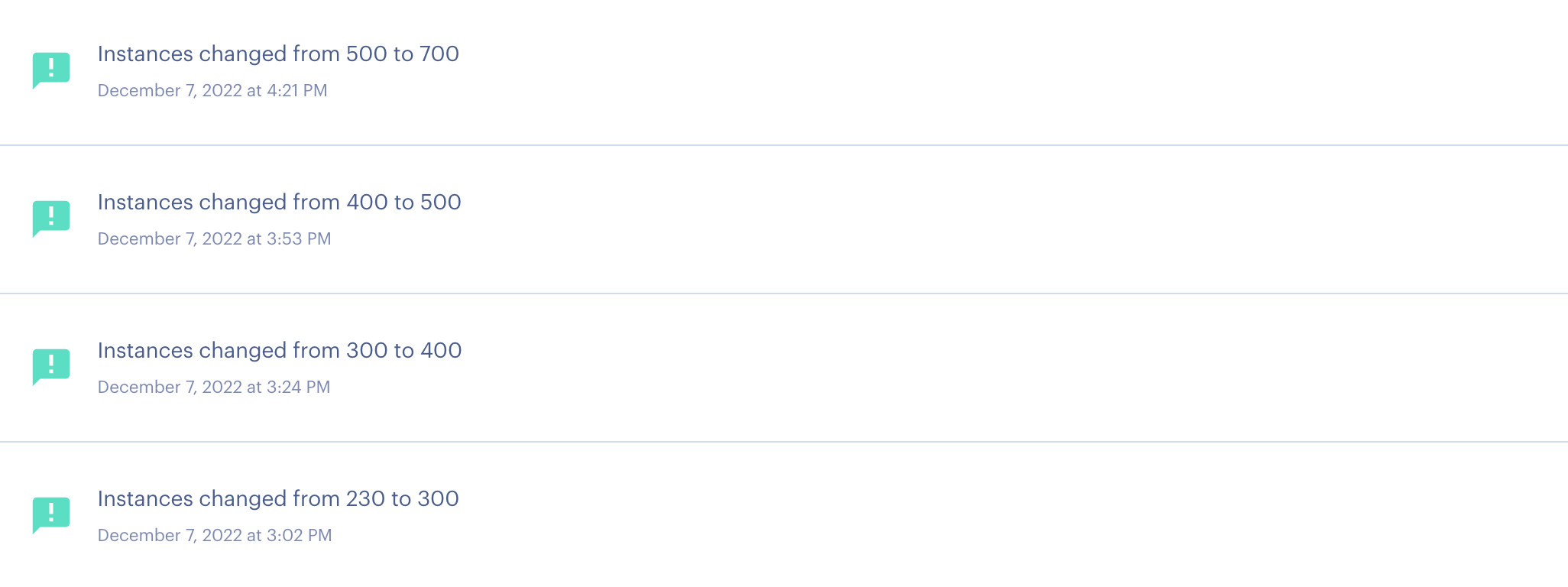

We started with a few machines, and progressively scaled up to a few hundred concurrent machines.

Scaling up those machines was a lot of fun, and when I realised that I could be done in an hour or two by adding even more Render instances, I did just that.
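The reasoning behind "just scale up more" fits in a back-of-the-envelope calculation. This sketch assumes each machine renders one video at a time and ignores boot time, idle machines and retries; the function names and the example numbers are illustrative, not figures from our run. Wall-clock time shrinks as machines are added, while total instance-hours (what you are billed for) stay roughly constant.

```python
import math


def wall_clock_hours(videos: int, minutes_per_video: float, machines: int) -> float:
    # Machines work in parallel "rounds" of one video each
    rounds = math.ceil(videos / machines)
    return rounds * minutes_per_video / 60


def instance_hours(videos: int, minutes_per_video: float) -> float:
    # Total compute billed, independent of how many machines share the work
    return videos * minutes_per_video / 60


# Illustrative: 24,000 videos at 10 minutes each
for machines in (100, 400, 2_000):
    print(machines, wall_clock_hours(24_000, 10, machines), instance_hours(24_000, 10))
```

Under these assumptions, adding machines buys time rather than extra cost, which is exactly what makes it tempting to keep scaling.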

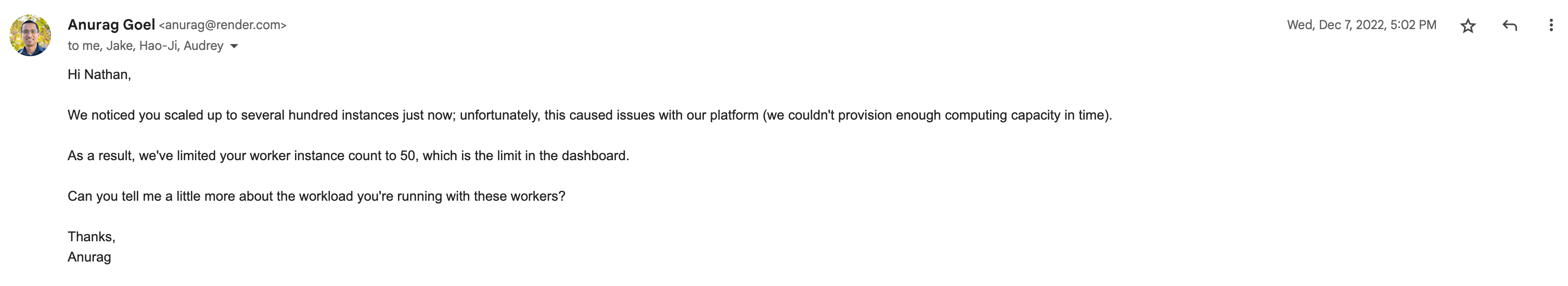

Thirty minutes later, though, I received an email from Render's DevOps team: we were breaking their infrastructure.

So we slowed down and finished rendering all the videos about 12 hours later. A lot of fun!

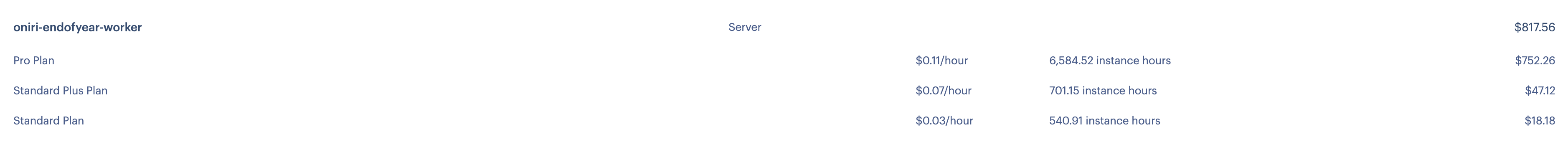

All in all, the fun cost us about $800, for a total of 7'000 instance hours.
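Put another way, that is roughly 11 cents per instance-hour. Assuming each instance renders one video at a time, a single 10-minute render costs about 2 cents of compute:

$$
\frac{\$800}{7\,000\ \text{instance-hours}} \approx \$0.114\ \text{per instance-hour},
\qquad
\$0.114 \times \frac{10}{60} \approx \$0.019\ \text{per 10-minute render}
$$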

Conclusion

This was a lot of fun, and our users loved it. Some of them shared their videos, and we saw a spike in sign-ups a few days after the release.

Still, I'm not sure we got $800 worth of sign-ups compared to what the same budget would have bought in ads. The benefits of something like this are probably more subtle: increased retention, word-of-mouth advocacy, and so on.

We'll definitely do it again next year!

I hope you enjoyed this post!

Nathan